Attack of the depth buffer

In these exciting modern times, people get a lot of mileage out of their depth buffers. Long gone are the days when we only used depth buffers for visibility and stenciling, as we now make use of the depth buffer to reconstruct the world-space or view-space position of our geometry at any given pixel. This can be a powerful performance optimization, since the alternative is to output position into a “fat” floating-point buffer. However, it’s important to realize that using the depth buffer in such unconventional ways can impose new precision requirements, since complex functions like lighting attenuation and shadowing will depend on the accuracy of the value stored in your depth buffer. This is particularly important if you’re using a hardware depth buffer for reconstructing position, since the z/w value stored in it will be non-linear with respect to the view-space z value of the pixel. If you’re not familiar with any of this, there’s a good overview here by Steve Baker. The basic gist of it is that z/w will increase very quickly as you move away from the near-clip plane of your perspective projection, and for much of the area viewable by your camera you will have values >= 0.9 in your depth buffer. Consequently you’ll end up with a lot of precision for geometry that’s close to your camera, and very little for geometry that’s way in the back. This article from codermind has some mostly-accurate graphs that visualize the problem.
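To make the non-linearity concrete, here is a minimal Python sketch (not part of the original test app) that evaluates z/w for a D3D-style perspective projection mapping the near plane to 0 and the far plane to 1. The function name `perspective_depth` and the clip planes of 1 and 101 are assumptions chosen for illustration.

```python
def perspective_depth(view_z, near, far):
    """z/w for a D3D-style projection that maps [near, far] to [0, 1]."""
    return (far / (far - near)) * (1.0 - near / view_z)


if __name__ == "__main__":
    near, far = 1.0, 101.0  # illustrative clip planes
    for view_z in (1.0, 2.0, 5.0, 10.0, 50.0, 101.0):
        depth = perspective_depth(view_z, near, far)
        print(f"view z = {view_z:6.1f} -> z/w = {depth:.4f}")
```

With these planes, z/w already exceeds 0.9 at a view-space depth of 10, so roughly the last 90% of the view distance shares the top tenth of the depth range.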

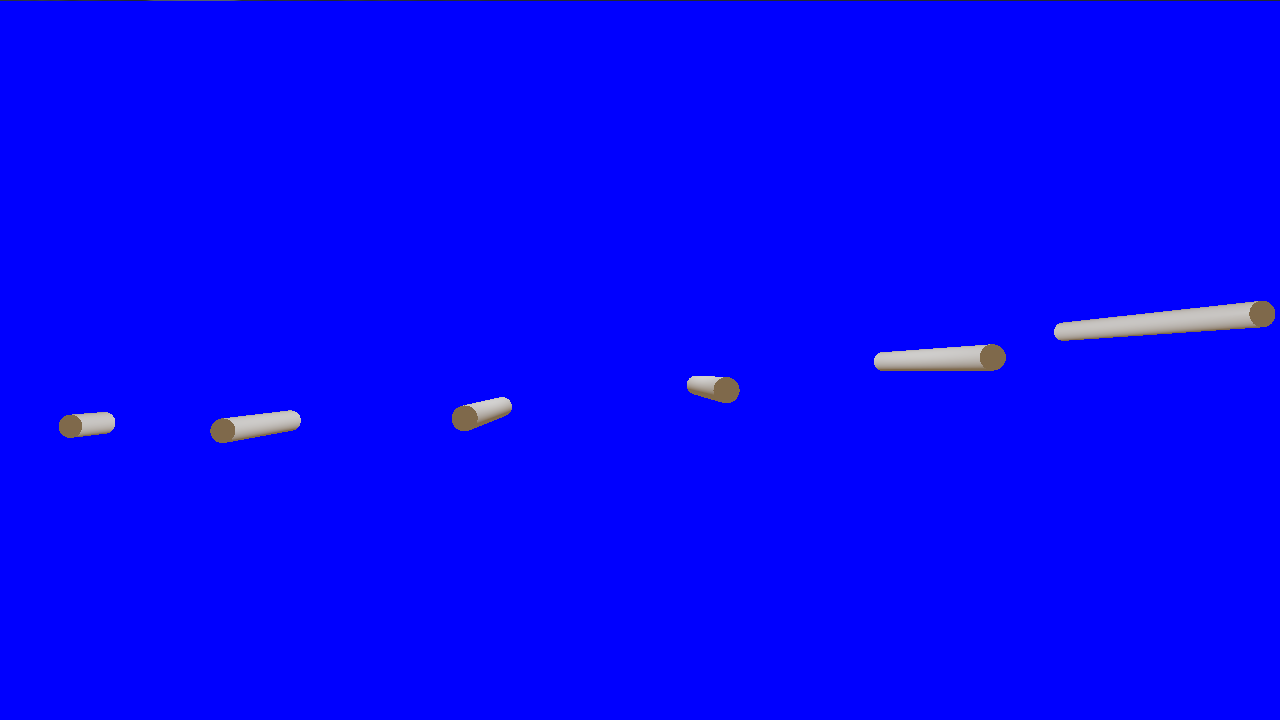

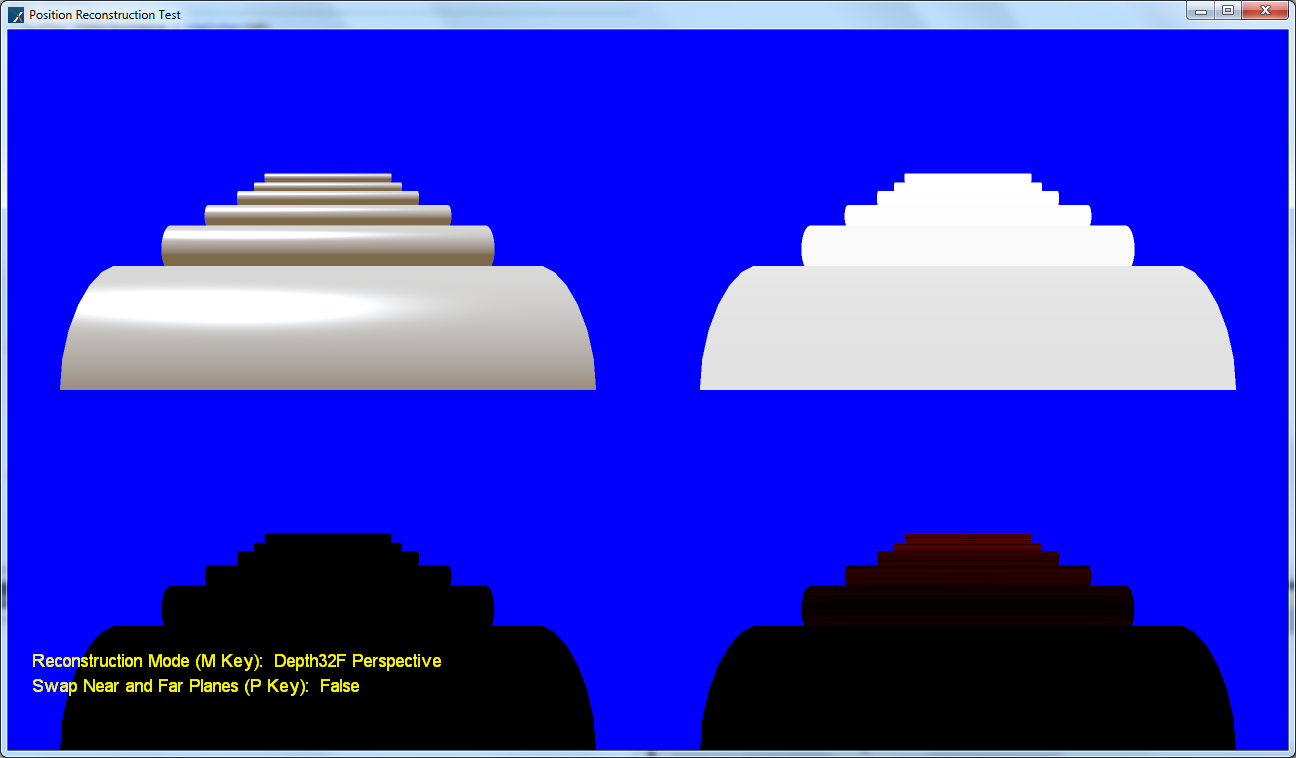

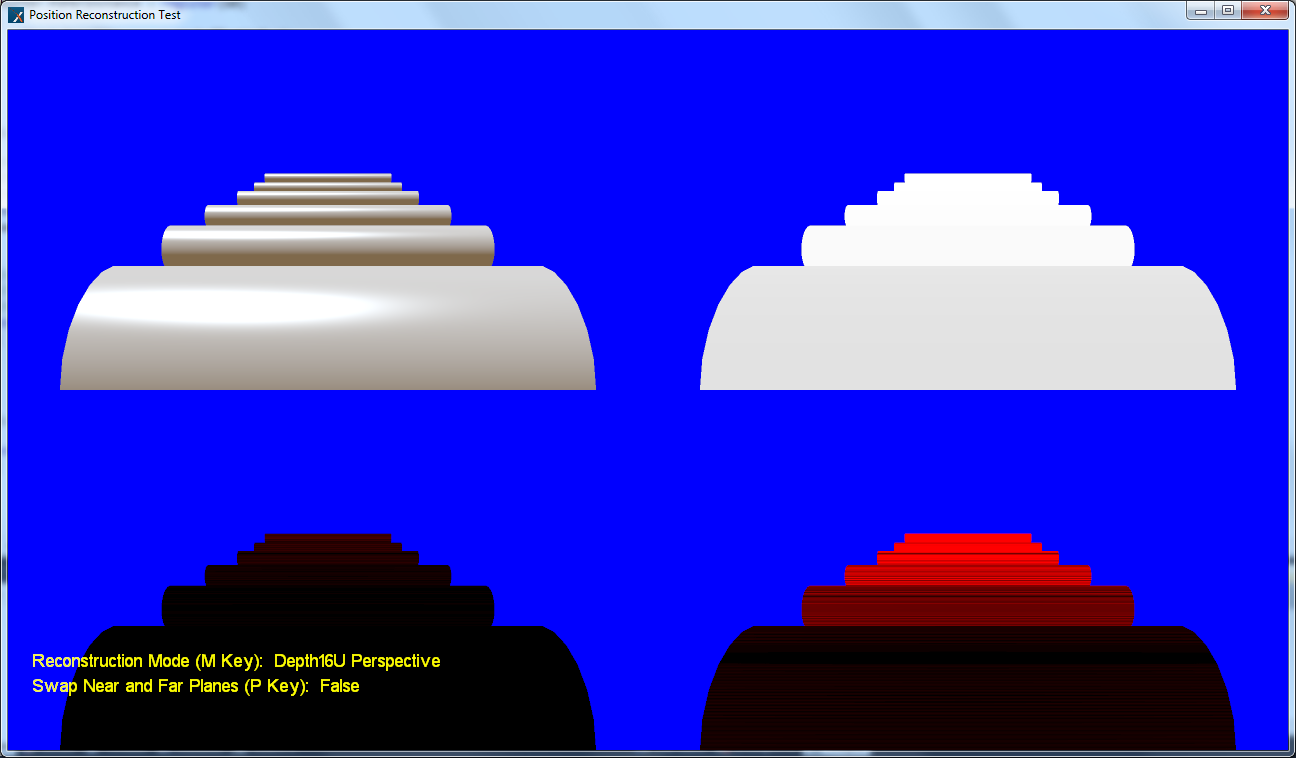

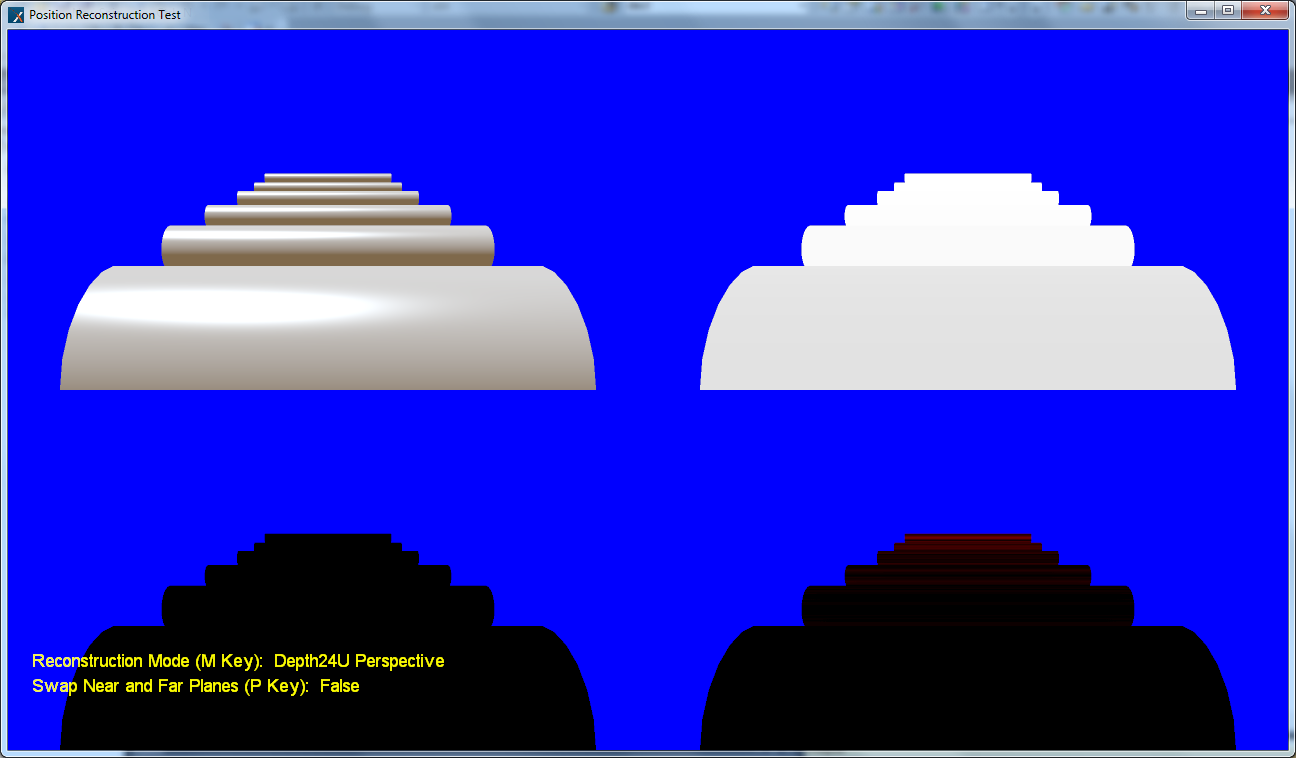

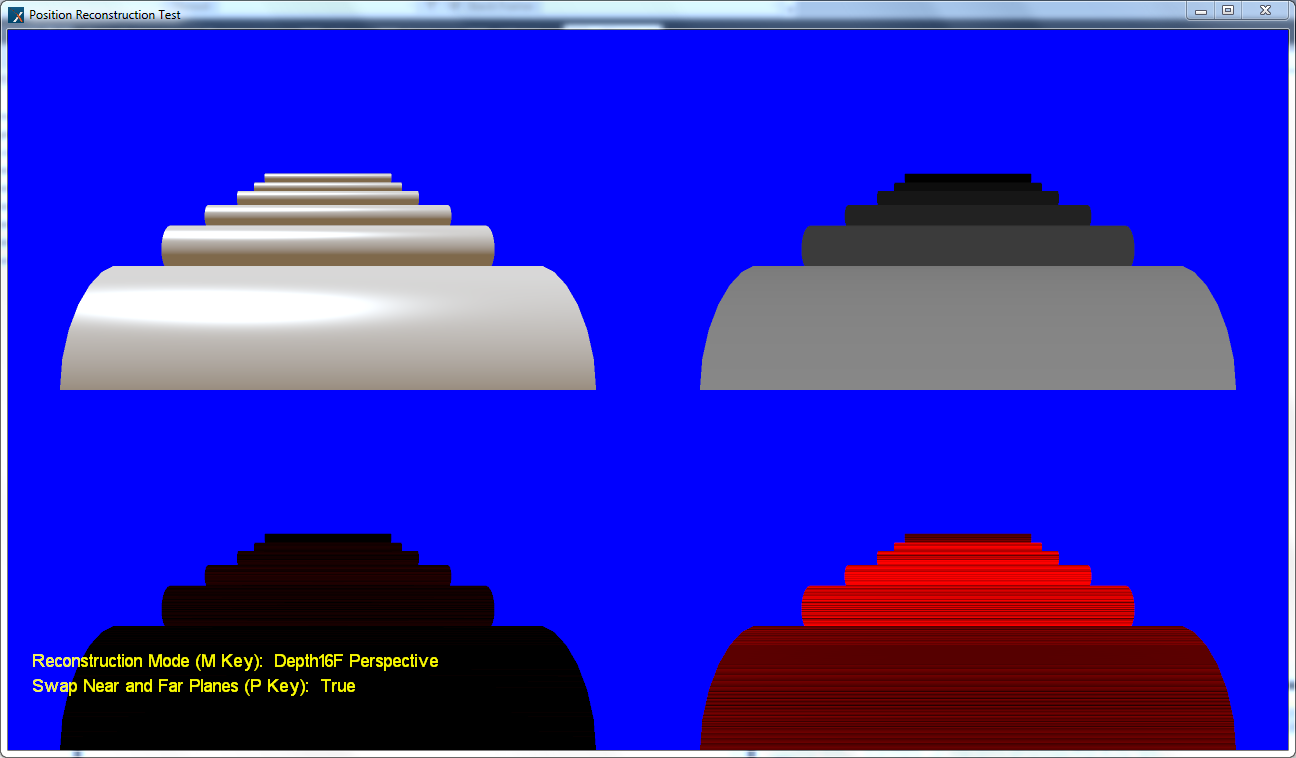

Recently I’ve been doing some research into different formats for storing depth, in order to get a solid idea of the amount of error I can expect. To do this I made a DirectX 11 app where I rendered a series of objects at various depths, and compared the position reconstructed from the depth buffer with a position interpolated from the vertex shader. This let me easily flip through different depth formats and visualize the associated error. Here’s a front view and a side view of the test scene:

The cylinders are placed at depths of 5, 20, 40, 60, 80, and 100. The near-clip plane was set to 1, and the far-clip was set to 101.

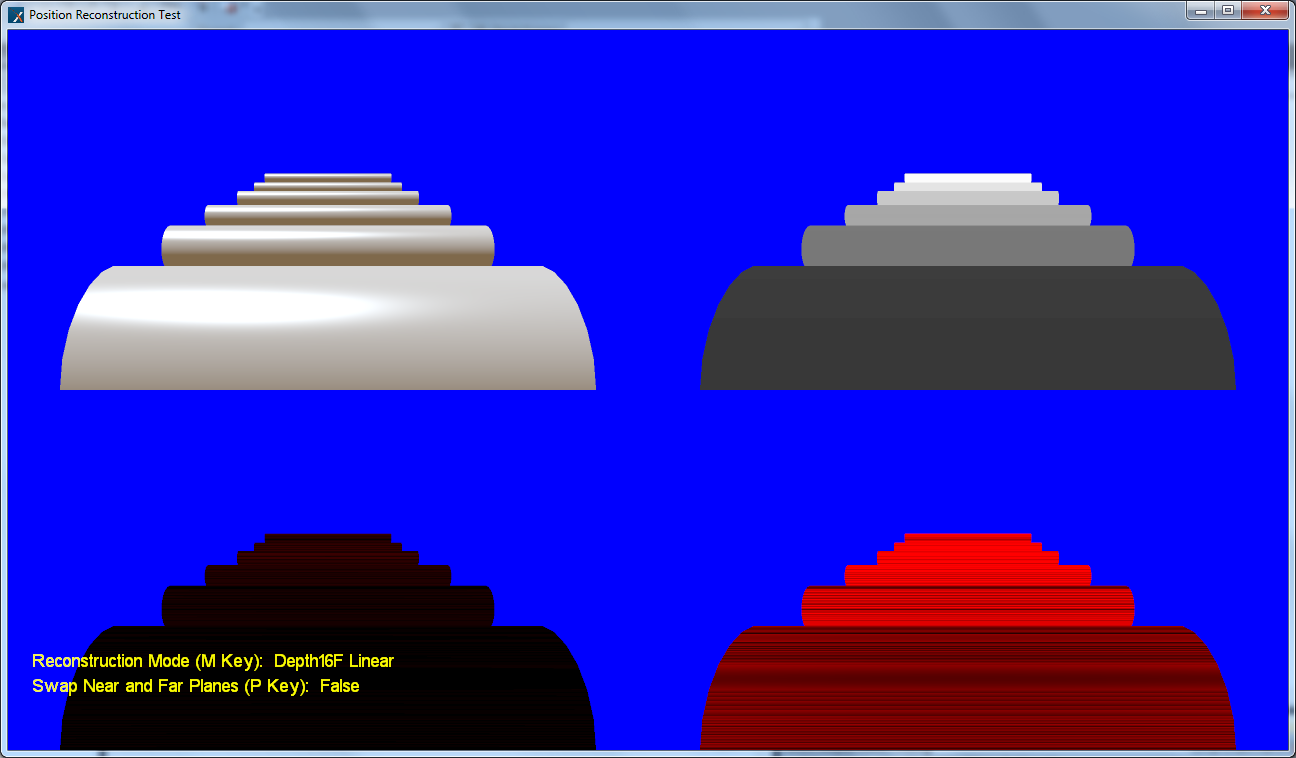

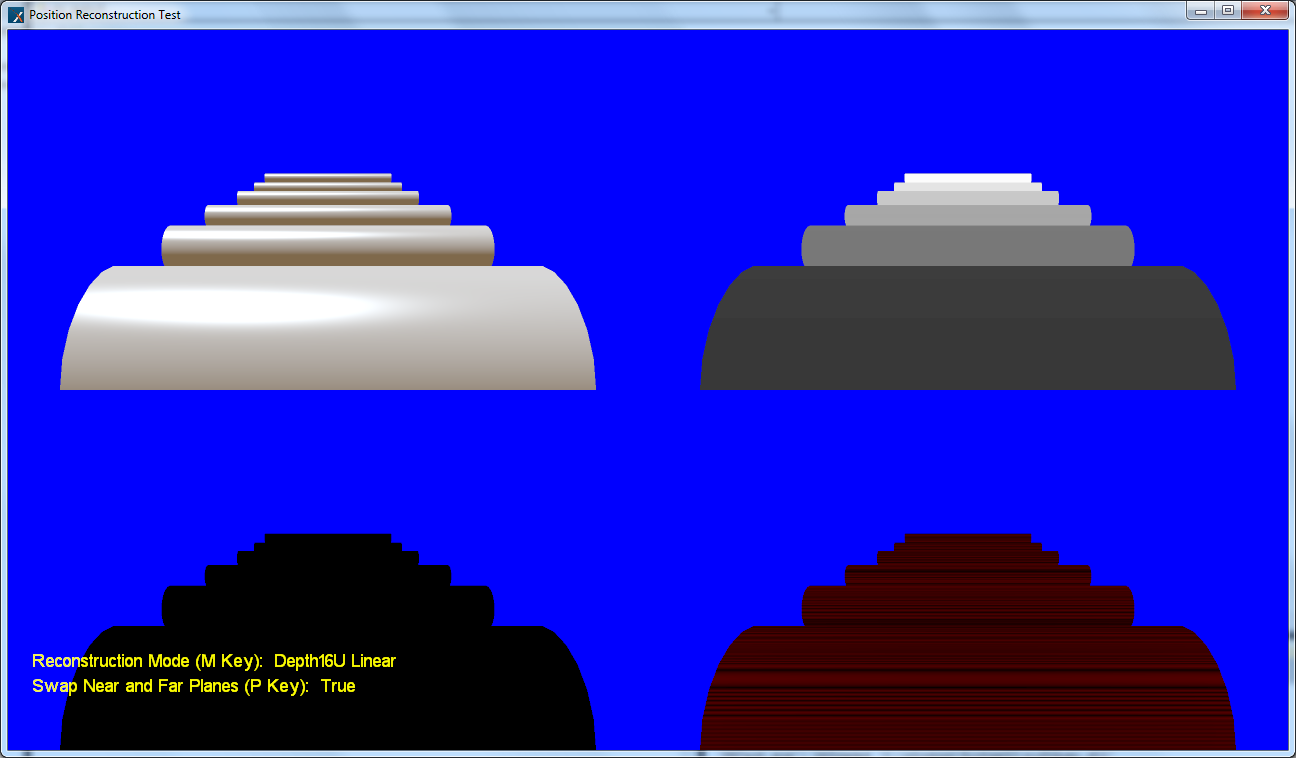

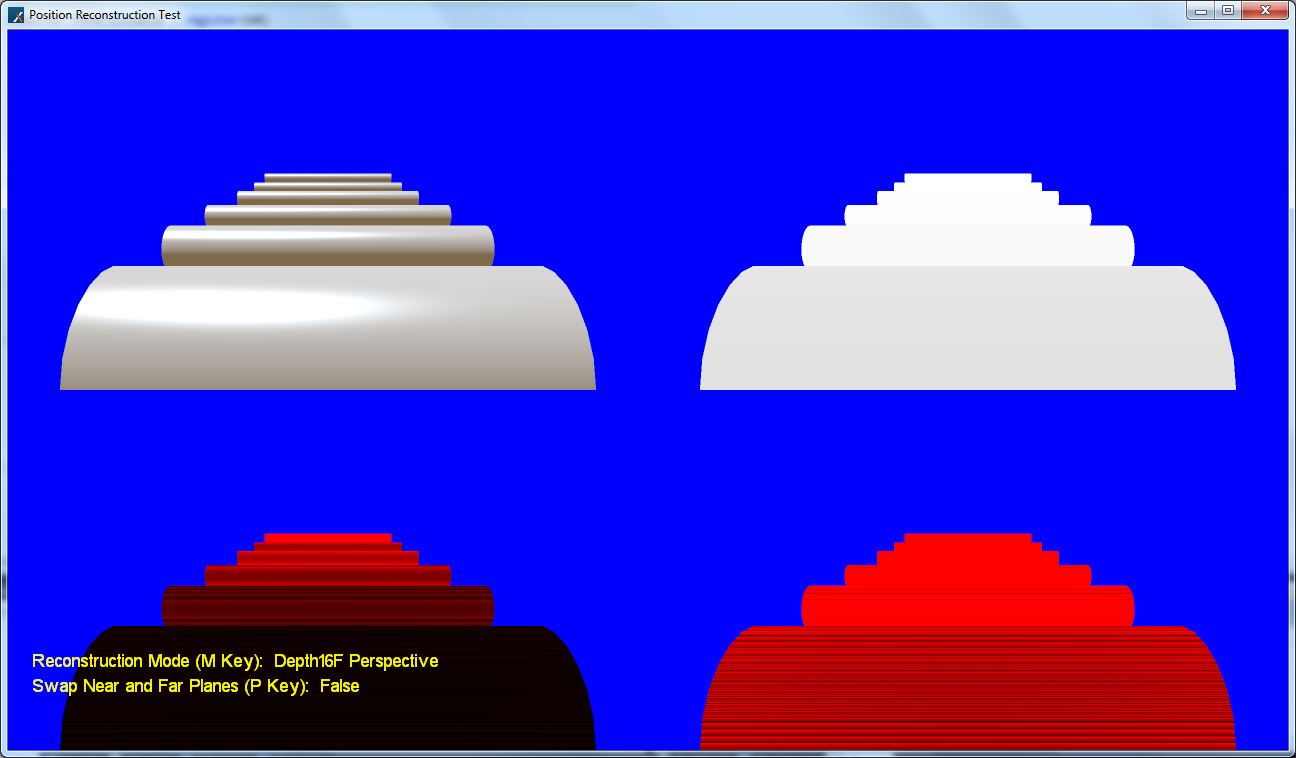

For an error metric, I calculated the difference between the reference position (interpolated view-space vertex position) and the position reconstructed from depth, and normalized it by dividing by the distance to the far clip plane. I also multiplied by 100, so that a fully red pixel represented a difference equivalent to 1% of the view distance. For the final output I put the shaded and lit scene in the top-left corner, the sampled depth in the top right, the error in the bottom left, and error * 100 in the bottom right.
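The metric can be sketched in a few lines of Python. This is an illustration, not the shader from the test app: the function names are hypothetical, and it assumes that "difference" means the Euclidean distance between the two view-space positions.

```python
import math


def position_error(reference_pos, reconstructed_pos, far_clip):
    """Distance between two positions as a fraction of the far-clip distance."""
    squared = sum((a - b) ** 2 for a, b in zip(reference_pos, reconstructed_pos))
    return math.sqrt(squared) / far_clip


def error_to_red(error):
    """Scale by 100 and clamp to [0, 1], so an error of 1% maps to full red."""
    return min(max(error * 100.0, 0.0), 1.0)


if __name__ == "__main__":
    reference = (0.0, 0.0, 100.0)
    reconstructed = (0.0, 0.0, 100.5)  # hypothetical reconstruction result
    error = position_error(reference, reconstructed, far_clip=101.0)
    print(f"error = {error:.6f}, red channel = {error_to_red(error):.3f}")
```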

For all formats marked “Linear Z”, the depth was calculated by taking view-space Z and dividing by the distance to the far-clip plane. Position was reconstructed using the method described here. For formats marked “Perspective Z/W”, the depth was calculated by interpolating the z and w components of the clip-space position and then dividing in the pixel shader. Position was reconstructed by first reconstructing view-space Z from Z/W using values derived from the projection matrix. For formats marked “1 - Perspective Z/W”, the near and far plane values were flipped when creating the perspective projection matrix. This effectively stores 1 - z/w in the depth buffer. More on that in #9.
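The three encodings, and the step of recovering view-space Z from each, can be sketched as follows. This is a minimal Python illustration assuming a D3D-style projection that maps the near plane to 0 and the far plane to 1. The names `projection_terms`, `proj_a`, and `proj_b` are made up here; they stand for the two projection-matrix entries that determine z/w.

```python
def projection_terms(near, far):
    """Return the two projection-matrix entries that determine z/w."""
    proj_a = far / (far - near)
    proj_b = -near * far / (far - near)
    return proj_a, proj_b


def linear_depth(view_z, far):
    return view_z / far


def view_z_from_linear(depth, far):
    return depth * far


def perspective_depth(view_z, proj_a, proj_b):
    return proj_a + proj_b / view_z


def view_z_from_perspective(depth, proj_a, proj_b):
    return proj_b / (depth - proj_a)


if __name__ == "__main__":
    near, far = 1.0, 101.0
    standard = projection_terms(near, far)
    flipped = projection_terms(far, near)  # swapped planes store 1 - z/w
    for view_z in (5.0, 20.0, 40.0, 60.0, 80.0, 100.0):
        d = perspective_depth(view_z, *standard)
        r = perspective_depth(view_z, *flipped)
        print(f"z = {view_z:5.1f}  z/w = {d:.6f}  1 - z/w = {r:.6f}  "
              f"recovered z = {view_z_from_perspective(r, *flipped):.6f}")
```

Passing the flipped terms to the same two functions is all that changes for the “1 - Perspective Z/W” case, which mirrors the description of flipping the planes when building the matrix.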

So without further rambling, let’s look at some results:

1. Linear Z, 16-bit floating point

So things are not so good on our first try. We get significant errors along the entire visible range with this format, with the error increasing as we get towards the far-clip plane. This makes sense, considering that a floating-point value has more precision closer to 0.0 than it does closer to 1.0.
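The uneven precision of a 16-bit float can be checked outside the GPU. The sketch below is a CPU-side Python illustration, not the test app: it rounds linear depth to half precision with the standard `struct` module (format character `e`) and reports the resulting view-space error. The helper names and the far plane of 101 are assumptions for illustration.

```python
import math
import struct


def to_half(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def half_spacing(value):
    """Gap between adjacent fp16 values around a positive, normal-range value."""
    _, exponent = math.frexp(value)  # value = m * 2**exponent, 0.5 <= m < 1
    return math.ldexp(1.0, exponent - 11)


def linear_z_error(view_z, far):
    """View-space error after storing view_z / far as a 16-bit float."""
    stored = to_half(view_z / far)
    return abs(stored * far - view_z)


if __name__ == "__main__":
    far = 101.0
    for view_z in (5.0, 20.0, 40.0, 60.0, 80.0, 100.0):
        depth = view_z / far
        print(f"z = {view_z:5.1f}  fp16 spacing = {half_spacing(depth):.2e}  "
              f"error = {linear_z_error(view_z, far):.5f}")
```

With a far plane of 101, the gap between representable values at a depth of 100 is 16 times wider than at a depth of 5.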

2. Linear Z, 32-bit floating point

Ahh, much nicer. No visible error at all. It’s pretty clear that if you’re going to manually write depth to a render target, this is a good way to go. Storing into a 32-bit UINT would probably have even better results due to an even distribution of precision, but that format may not be available depending on your platform. In D3D11 you’d also have to add a tiny bit of packing/unpacking code since there’s no 32-bit UNORM format.
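The packing and unpacking mentioned for a 32-bit UINT target amounts to a scale and a round. Here is a minimal Python sketch of the idea with hypothetical function names. It uses double-precision arithmetic, so it only illustrates the encoding; a real shader working in 32-bit floats cannot represent every 32-bit integer exactly and would need more care.

```python
UINT32_MAX = 0xFFFFFFFF


def pack_depth_uint32(depth):
    """Map a depth in [0, 1] to an unsigned 32-bit integer."""
    clamped = min(max(depth, 0.0), 1.0)
    return int(round(clamped * UINT32_MAX))


def unpack_depth_uint32(packed):
    """Map an unsigned 32-bit integer back to a depth in [0, 1]."""
    return packed / UINT32_MAX


if __name__ == "__main__":
    for view_z in (5.0, 50.0, 100.0):
        depth = view_z / 101.0  # linear depth with an illustrative far plane
        packed = pack_depth_uint32(depth)
        print(f"z = {view_z:5.1f}  packed = {packed:10d}  "
              f"round-trip error = {abs(unpack_depth_uint32(packed) - depth):.3e}")
```

The step size is the same everywhere in the range, which is the even distribution of precision the paragraph above refers to.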

3. Linear Z, 16-bit UINT

For this image I output depth to a render target with the DXGI_FORMAT_R16_UNORM format. As you can see it still has errors, but they’re significantly decreased compared to 16-bit floating point. It seems to me that if you were going to restrict yourself to 16 bits for depth, this is the way to go.
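A quick CPU-side comparison shows why the UNORM encoding comes out ahead for linear depth. The Python sketch below is an illustration with assumed helper names and an assumed far plane of 101: it quantizes the same linear depth value both ways and reports the error in view-space units.

```python
import struct


def to_half(value):
    """Round to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def to_unorm16(value):
    """Round to the nearest value representable as a 16-bit UNORM."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * 65535) / 65535


def linear_z_errors(view_z, far):
    """Return (fp16 error, unorm16 error) in view-space units."""
    depth = view_z / far
    return (abs(to_half(depth) * far - view_z),
            abs(to_unorm16(depth) * far - view_z))


if __name__ == "__main__":
    for view_z in (5.0, 20.0, 40.0, 60.0, 80.0, 100.0):
        fp16_error, unorm_error = linear_z_errors(view_z, 101.0)
        print(f"z = {view_z:5.1f}  fp16 = {fp16_error:.6f}  unorm16 = {unorm_error:.6f}")
```

The UNORM step is a constant 1/65535, while the fp16 step is only finer than that for depth values below roughly 1/64, which covers very little of the visible range.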

4. Perspective Z/W, 16-bit floating point

This is easily the worst format out of everything I tested. You’re at a disadvantage right off the bat just from using 16 bits instead of 32, and you also compound that with the non-linear distribution of precision that occurs from storing perspective depth. Then on top of that, you’re encoding to floating point which gives you even worse precision for geometry that’s far from the camera. The results are not pretty…don’t use this!

5. Perspective Z/W, 32-bit floating point

This one isn’t so bad compared to using a 16-bit float, but there’s still error at higher depth values.

6. Perspective Z/W, 16-bit UINT

I used a normal render target for this in my test app, but it should be mostly equivalent to sampling from a 16-bit depth buffer. As you’d expect, there’s quite a bit of error once you move away from the near clip plane.

7. Perspective Z/W, 24-bit UINT

This is the most common depth buffer format, and in my sample app I actually sampled the hardware depth-stencil buffer created from the first rendering pass. Compared to some of the alternatives this really isn’t terrible, and a lot of people have shipped awesome-looking games with this format. The maximum error towards the back is ~0.005% of the view distance. However, if the distance to your far plane is very large, the error can be pretty significant.
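How the far-plane distance affects a 24-bit z/w buffer can be explored with a small Python sketch. It isolates quantization of the stored value and ignores every other source of error in a real renderer, so it is not expected to reproduce the measured figure above. The helper names, the sample count, and the clip-plane values are assumptions for illustration.

```python
UNORM24_MAX = (1 << 24) - 1


def quantize_unorm24(value):
    """Round to the nearest value representable as a 24-bit UNORM."""
    clamped = min(max(value, 0.0), 1.0)
    return round(clamped * UNORM24_MAX) / UNORM24_MAX


def view_z_error(view_z, near, far):
    """View-space Z error after storing z/w in 24 bits and reconstructing."""
    proj_a = far / (far - near)
    proj_b = -near * far / (far - near)
    stored = quantize_unorm24(proj_a + proj_b / view_z)
    return abs(proj_b / (stored - proj_a) - view_z)


def worst_error(near, far, samples=256):
    """Largest error over evenly spaced depths between the clip planes."""
    depths = (near + (far - near) * (i + 1) / samples for i in range(samples))
    return max(view_z_error(z, near, far) for z in depths)


if __name__ == "__main__":
    for far in (101.0, 1000.0, 10000.0):
        worst = worst_error(1.0, far)
        print(f"far = {far:7.0f}  worst error = {worst:.6f} units "
              f"({100.0 * worst / far:.6f}% of view distance)")
```

Because z/w flattens out with distance, a single quantization step covers a view-space interval that grows roughly with the square of the depth, which is why pushing the far plane out hurts so much.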

8. Position, 16-bit floating point

For this format, I just output view-space position straight to a DXGI_FORMAT_R16G16B16A16_FLOAT render target. The only thing this format has going for it is convenience and speed of reconstruction…all you have to do is sample and you have position. In terms of accuracy, the amount of error is pretty close to what you get from storing linear depth in a 16-bit float. All in all…it’s a pretty bad choice.

9. 1 - Z/W, 16-bit floating point

This is where things get a bit interesting. Earlier I mentioned how floating-point values have more precision closer to 0.0 than they do closer to 1.0. It turns out that if you flip your near and far planes so that you store 1 - z/w in the depth buffer, your two precision distribution issues will mostly cancel each other out. As far as I know this was first proposed by Humus in this Beyond3D thread. He later posted this short article, where he elaborated on some of the issues brought up in that thread. As you can see he’s quite right: flipping the clip planes gives significantly improved results. They’re still not great, but clearly we’re getting somewhere.
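The cancellation can be reproduced on the CPU. The Python sketch below is an illustration with assumed names and clip planes of 1 and 101, not the test app: it stores z/w in half precision with the planes in the usual order and then flipped, and compares the reconstructed view-space Z.

```python
import struct


def to_half(value):
    """Round to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def view_z_error_fp16(view_z, near, far):
    """Error in reconstructed view-space Z after storing z/w as fp16.

    Pass the planes swapped (near=far plane, far=near plane) to store 1 - z/w.
    """
    proj_a = far / (far - near)
    proj_b = -near * far / (far - near)
    stored = to_half(proj_a + proj_b / view_z)
    return abs(proj_b / (stored - proj_a) - view_z)


if __name__ == "__main__":
    near, far = 1.0, 101.0
    for view_z in (5.0, 20.0, 40.0, 60.0, 80.0, 100.0):
        standard = view_z_error_fp16(view_z, near, far)
        flipped = view_z_error_fp16(view_z, far, near)
        print(f"z = {view_z:5.1f}  z/w error = {standard:.5f}  "
              f"1 - z/w error = {flipped:.5f}")
```

In this sketch the standard encoding at a depth of 100 rounds to exactly 1.0, which reconstructs as the far plane, while the flipped encoding stores a small value near 0.0 where half precision has plenty of resolution.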

10. 1 - Z/W, 32-bit floating point

With a 32-bit float, flipping the planes gives us results similar to what we got when storing linear z. Not bad! In D3D10/D3D11 you can even use this format for a depth-stencil buffer…as long as you’re willing to either give up stencil or use 64 bits for depth.
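The same comparison can be sketched for 32-bit floats. This Python illustration rounds values to single precision with the `struct` module and sweeps depths between assumed clip planes of 1 and 101. All helper names are hypothetical, and the surrounding arithmetic runs in double precision, so it only models the storage step.

```python
import struct


def to_single(value):
    """Round to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


def perspective_error_fp32(view_z, near, far):
    """Error in reconstructed view-space Z after storing z/w as fp32."""
    proj_a = far / (far - near)
    proj_b = -near * far / (far - near)
    stored = to_single(proj_a + proj_b / view_z)
    return abs(proj_b / (stored - proj_a) - view_z)


def linear_error_fp32(view_z, far):
    """Error in view-space Z after storing view_z / far as fp32."""
    return abs(to_single(view_z / far) * far - view_z)


def worst(error_fn, near, far, samples=100):
    """Largest error over evenly spaced depths between the clip planes."""
    depths = (near + (far - near) * (i + 1) / samples for i in range(samples))
    return max(error_fn(z) for z in depths)


if __name__ == "__main__":
    near, far = 1.0, 101.0
    print("z/w     :", worst(lambda z: perspective_error_fp32(z, near, far), near, far))
    print("1 - z/w :", worst(lambda z: perspective_error_fp32(z, far, near), near, far))
    print("linear  :", worst(lambda z: linear_error_fp32(z, far), near, far))
```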

The one format I would have liked to add to this list is a 24-bit float depth-stencil format. This format is available on consoles, and is even exposed in D3D9 as D3DFMT_D24FS8. However according to the caps spreadsheet that comes with the DX SDK, only ATI 2000-series and newer GPUs actually support this format. In D3D10/D3D11 there doesn’t even appear to be an equivalent DXGI format, unless I’m missing something.

If there are any other formats or optimizations out there that you think are worthwhile, please let me know so that I can add them to the test app! Also if you’d like to play around with the test app, I’ve uploaded the source and binaries here. The project uses my new sample framework, which I still consider to be work-in-progress. However if you have any comments about the framework please let me know. I haven’t put in the time to make the components totally separable, but if people are interested then I will take some time to clean things up a bit.

EDIT: I also started a thread here on gamedev.net, to try to get some discussion going on the subject. Feel free to weigh in!

Comments:

Depth And Games « Sgt. Conker -

[…] “Blitzkrieg” Pettino fights with the depth buffer and Charles “One” Humphrey flags bits in […]

#### [default_ex]( "default_ex@live.com") -

The bit about D24FS8 worries me a bit. I was thinking of using that format to provide layers to non-opaque objects in my deferred rendering system following an observation from the real world (not often are non-opaque objects visible through each other). I was also considering using that format with stencil passes later in the render system to provide natural separation of depth layers in depth of field post processing, similar to what was described at GDC09 by the SO4 developers.

#### [Patrick](http://recreationstudios.blogspot.com "mccarthp@gmail.com") -

Have you looked at a Logarithmic Depth Buffer? I first heard about it on Ysaneya’s dev blog: http://www.gamedev.net/community/forums/mod/journal/journal.asp?jn=263350&reply_id=3513134 It is incredibly simple and it has fixed all z-buffer issues I’ve had in the past. It is amazing. I would definitely recommend trying it out.

#### [mpettineo](http://mynameismjp.wordpress.com/ "mpettineo@gmail.com") -

Yeah I did look into that a bit. It’s cool stuff, but I have two concerns:

1. It has artifacts for triangles that cross the near-clip plane. Ysaneya got around this by manually outputting depth from the pixel shader, but this is a non-option for me since it disables fast-z and early z-cull. It also means you can’t use multisampling, since you can only output a single depth value per fragment.
2. If you don’t store z/w, then I think you’ll run into all sorts of problems with z-cull and hardware optimizations since the z buffer will no longer be linear in screen space.

But thank you for bringing this up! It’s always good to have more things to think about.

#### [Michael Hansen](http://www.evofxstudio.net "michaelhansen555@msn.com") -

Hello MP, I love all your tutorials. As of the new Game Studio 4.0 we can do this depth thing. Here is some code so you can test it out. You can use custom shaders if you just create a Windows Game 4.0 project, delete the game.cs, and use the new classes below, which also handle the device. Now you have a floating-point 128-bit HDR buffer if you have a DirectX 11 card, and if you have a DirectX 10 or 10.1 card you will get a floating-point 64-bit HDR buffer. On Xbox 360 we will only get a 32-bit float HDR buffer, which is expanded to 64 bits in the Xenon GPU, so a lot of stuff can be done here.

Best Regards, Michael Hansen

```csharp
using System;
using System.Runtime.InteropServices;
using System.Windows.Forms;
using Microsoft.Xna.Framework.Graphics;

public partial class Form1 : Form
{
    public EvoFxEngine EvoFxEngine;

    public bool InitializeGame()
    {
        EvoFxEngine = new EvoFxEngine(this.Panel3D.Handle);
        return true;
    }

    private int lastTickCount;
    private int frameCount;
    private float FrameRate;
    private SpriteBatch MySpriteBatch;
    private SpriteFont MySpriteFont;

    public void RenderScene()
    {
        // Update the frame-rate counter roughly once per second.
        int currentTickCount = Environment.TickCount;
        int ticks = currentTickCount - lastTickCount;
        if (ticks >= 1000)
        {
            FrameRate = (float)frameCount * 1000 / ticks;
            frameCount = 0;
            lastTickCount = currentTickCount;
        }

        EvoFxEngine.GraphicsDevice.Clear(Microsoft.Xna.Framework.Color.CornflowerBlue);
        EvoFxEngine.GraphicsDevice.Present();
        frameCount++;
    }

    #region NativeMethods Class

    [System.Security.SuppressUnmanagedCodeSecurity] // We won’t use this maliciously
    [DllImport("User32.dll", CharSet = CharSet.Auto)]
    public static extern bool PeekMessage(out Message msg, IntPtr hWnd, uint messageFilterMin, uint messageFilterMax, uint flags);

    /// Windows Message
    [StructLayout(LayoutKind.Sequential)]
    public struct Message
    {
        public IntPtr hWnd;
        public IntPtr msg;
        public IntPtr wParam;
        public IntPtr lParam;
        public uint time;
        public System.Drawing.Point p;
    }

    // Render continuously while the message queue is empty.
    public void OnApplicationIdle(object sender, EventArgs e)
    {
        while (this.AppStillIdle)
        {
            RenderScene();
        }
    }

    private bool AppStillIdle
    {
        get
        {
            Message msg;
            return !PeekMessage(out msg, IntPtr.Zero, 0, 0, 0);
        }
    }

    #endregion
}

//////////////////////////////////////////////////////////////////////

public class EvoFxEngine
{
    private static GraphicsDevice graphicsDevice;

    public static GraphicsDevice GraphicsDevice
    {
        get { return graphicsDevice; }
        set { graphicsDevice = value; }
    }

    private GraphicsAdapter GraphicsAdapter;
    private GraphicsProfile GraphicsProfile;
    private PresentationParameters PresentationParameters;

    public EvoFxEngine(IntPtr ScreenHandle)
    {
        PresentationParameters = new PresentationParameters();
        PresentationParameters.BackBufferFormat = SurfaceFormat.HdrBlendable;
        PresentationParameters.IsFullScreen = false;
        PresentationParameters.BackBufferWidth = 1280;
        PresentationParameters.BackBufferHeight = 720;
        PresentationParameters.DeviceWindowHandle = ScreenHandle;
        PresentationParameters.DepthStencilFormat = DepthFormat.Depth24;
        PresentationParameters.PresentationInterval = PresentInterval.Immediate;
        GraphicsProfile = GraphicsProfile.Reach;
        graphicsDevice = new GraphicsDevice(GraphicsAdapter.DefaultAdapter, GraphicsProfile, PresentationParameters);
    }
}
```

#### []( "") -

DXGI_FORMAT includes: DXGI_FORMAT_D24_UNORM_S8_UINT http://msdn.microsoft.com/en-us/library/bb173059(VS.85).aspx

#### [Tex]( "ejted@hotmail.com") -

nice framework you have ! like the code for loading .X files… loading skinned .x files directly to dx11 would be nice to !

#### [Position From Depth 3: Back In The Habit « The Danger Zone](http://mynameismjp.wordpress.com/2010/09/05/position-from-depth-3/ "") -

[…] an actual working sample showing some of these techniques, you can have a look at the sample for my article on depth precision. Posted in Graphics, Programming. Leave a Comment […]

#### [Andon M. Coleman](https://www.facebook.com/andon.coleman "amcolema@eagle.fgcu.edu") -

One thing has been bugging me about this for years now… where are you getting a 16-bit **floating-point** perspective depth buffer from? In D3D and OpenGL the only 16-bit depth-renderable format is fixed-point (unorm). There is effectively only one portably exposed floating-point depth buffer format and that is 32-bit.

#### [SSAO on OpenGL ES 3.0 | WithTube](http://video.teletrunk.kz/?p=27 "") -

[…] in my opinion the best implementation of Hemispherical SSAO; SSAO | Game Rendering; Attack of the depth buffer: various representations of the z-buffer; Linear algebra […]

#### [Linear depth buffer my ass | Yosoygames](http://yosoygames.com.ar/wp/2014/01/linear-depth-buffer-my-ass/ "") -

[…] Attack of the depth buffer […]

#### [Steve](http://blog.selfshadow.com "sjh199@zepler.org") -

Did you give 24bit uint a go with 1 – z/w? You may find that you get the similar benefits.

#### [WillP]( "pearcewf@gmail.com") -

I’ve been using a 32-bit floating point depth buffer in my project for a while, and recently gave the 1 - Z / W 32-bit floating point option shown above a try. It did indeed give me better depth precision, even with increasing the distance between my near and far planes by a decent amount. My question is are there any reasons besides losing stencil or having to use the 64 bit depth/stencil option that this should NOT be used? I didn’t notice any difference in application performance, but was wondering if there are potential issues I may be overlooking by switching over and fully adopting this format into my engine. What would keep this usage pattern that gives better depth storage from becoming a norm (or is it already, and I just missed the boat)?

#### [Depth Precision Visualized – Nathan Reed's coding blog](http://www.reedbeta.com/blog/2015/07/03/depth-precision-visualized/ "") -

[…] open-access link available, unfortunately). It was more recently re-popularized in blog posts by Matt Pettineo and Brano Kemen, and by Emil Persson’s Creating Vast Game Worlds SIGGRAPH 2012 […]

#### [EO]( "dominicstreeter@hotmail.com") -

WARNING: The geometry is drawn twice to render the lighting in this sample there is no deferred lighting equation hidden here, this light is forward rendered. The sample does not demonstrate depth buffer position reconstruction for a light based model, resolving the view or world space position of the light on any part of the screen. The precision will vary between the fudged reconstruction here and a true reconstruction algorithms final precision. I have so many questions but rather than asking them I implore you to write the definitive baseline sample for depth reconstruction from a real light model so you can get the view and world space position and prove all the math. Your snippets tantalisingly offer the elements of a pipeline where every element is critical, in isolation they only become valuable if you get it perfect and it is very slow and grinding work coming from a forward background. I will never surrender to this depth buffers attack; but please help me. If the plea of common dev folk does not drive you perhaps that margin of error between algorithms and positions might do instead :)

#### [MJP](http://mynameismjp.wordpress.com/ "mpettineo@gmail.com") -

For most of the formats that were tested, I was manually generating the depth buffer by outputting a depth value from a pixel shader to a render target. I don’t think that fp16 is a supported depth format for any GPU that I’ve ever worked with. I included it in the testing because when I wrote the article it was still fairly common for DX9 games to store depth in render targets in order to avoid relying on driver hacks.

#### [Reading list on Z-buffer precision | Light is beautiful](http://lousodrome.net/blog/light/2015/07/22/reading-list-on-z-buffer-precision/ "") -

[…] Attack of the depth buffer, 2010, by Matt Pettineo, on visualizing depth buffer error when using different buffer formats. […]

#### [How can I find the pixel space coordinates of a 3D point – Part 3 – The Depth Buffer – Nicolas Bertoa](https://nbertoa.wordpress.com/2017/01/21/how-can-i-find-the-pixel-space-coordinates-of-a-3d-point-part-3-the-depth-buffer/ "") -

[…] Attack of The Depth Buffer – Matt Petineo […]

#### [Reverse Z Cheat Sheet – Intrinsic](http://www.intrinsic-engine.com/reverse-z-cheat-sheet/ "") -

[…] https://mynameismjp.wordpress.com/2010/03/22/attack-of-the-depth-buffer/ […]

#### [What’s New in Metal 3 – Metal by Example](http://metalbyexample.com/new-in-metal-3/ "") -

[…] This post by Matt Pettineo has a good overview of the trade-offs of different approaches to depth buffer precision. You can use the embedded tool in this post by Theodor Mader to get a sense of how error is distributed when using 16-bit depth. […]