There's More Than One Way To Defer A Renderer

While the idea of deferred shading/deferred rendering isn’t quite as hot as it was a year or two ago (OMG, Killzone 2 uses deferred rendering!), it’s still a cool idea that gets discussed rather often. People generally tend to be attracted to the way a “pure” deferred renderer neatly and cleanly separates your geometry from your lighting, as well as the idea of being able to throw lights everywhere in their scene. However, as anyone who’s done a little bit of research into the topic surely knows, it comes with a few drawbacks. The main ones are that for MSAA you need to individually light all your subsamples (which isn’t doable in D3D9), and that for non-opaque objects you have to use forward rendering anyway.

The neat thing about the concepts involved with deferred shading is that you’re not at all locked into the typical “render depth+normals+diffuse+specular to a fat G-Buffer and then shade” approach. I’m not sure enough people are aware of this and appreciate it. For example, you can defer just your shadow map calculations to gain the related performance and organization benefits, and then use standard forward rendering techniques for everything else. Or you can reconfigure the deferred lighting pipeline to gain back the ability to have multiple materials, or the ability to multisample without shading individual subsamples. Surely there are even more possibilities!

Recently, while working on my own game, I was grappling with the issue of having my engine support more local light sources in a scene. I was using standard forward lighting with up to 3 lights per pass (which was fine), but I really wanted to keep my DrawPrimitives calls to a minimum (due to how painful they can be on the 360). This was a problem since I’m aggressively batching my mesh rendering using instancing, and sorting instances by which lights affect them would cause my batch count to increase. Thus, I was using 3 “global” light sources per frame. This has an obvious drawback: lights that only affect a small part of the scene aren’t really possible.

While I was thinking over solutions, I considered the importance of smaller local lights that are relatively far away in the scene. At further distances, it’s not necessarily too important to have “correct” lighting. In fact, we basically just need something that’s the right color, makes the area brighter, and doesn’t shade surfaces facing away from the light source. So I thought: “I already have view-space depth…if I can calculate view-space normals I can get what I want by using a deferred pass”. So I did exactly this…and it didn’t work very well. The problem was that even though you can calculate a view-space normal from a depth value by calculating the partial derivatives and taking a cross product, the normals you calculate aren’t smoothly interpolated between vertices. Every pixel inside a triangle lies on the same plane, so every pixel gets that triangle’s face normal. So what you get is something that looks an awful lot like flat shading. Ewwwwwwwwwwww.
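To make the derivative-and-cross-product idea concrete, here’s a minimal CPU-side sketch in Python. It is not the shader I actually used; the function names, the left-handed view space (camera looking down +z) and the `tan_half_fov` projection parameter are all assumptions made for illustration. Forward differences against the neighbouring pixels stand in for the GPU’s partial derivatives.

```python
import math

def view_position(px, py, depth, width, height, tan_half_fov):
    """Rebuild a view-space position from a pixel coordinate and view-space depth.
    Assumes a left-handed view space: +x right, +y up, camera looking down +z."""
    aspect = width / height
    ndc_x = (px + 0.5) / width * 2.0 - 1.0
    ndc_y = 1.0 - (py + 0.5) / height * 2.0   # pixel rows grow downward
    return (ndc_x * tan_half_fov * aspect * depth,
            ndc_y * tan_half_fov * depth,
            depth)

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)

def normal_from_depth(depth, px, py, tan_half_fov=1.0):
    """Estimate a view-space normal at pixel (px, py) of a 2D depth grid.
    Uses forward differences, so px + 1 and py + 1 must be inside the grid."""
    height, width = len(depth), len(depth[0])
    p = view_position(px, py, depth[py][px], width, height, tan_half_fov)
    p_right = view_position(px + 1, py, depth[py][px + 1], width, height, tan_half_fov)
    p_down = view_position(px, py + 1, depth[py + 1][px], width, height, tan_half_fov)
    # The two difference vectors lie in the surface; their cross product is
    # perpendicular to it. This order makes the normal face the camera (-z).
    return normalize(cross(sub(p_right, p), sub(p_down, p)))
```

Run this over a depth buffer containing a single flat polygon and every pixel comes back with exactly the same normal, which is the flat-shaded look described above: the depth buffer only knows about the triangle’s plane, not about the vertex normals you would normally interpolate.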

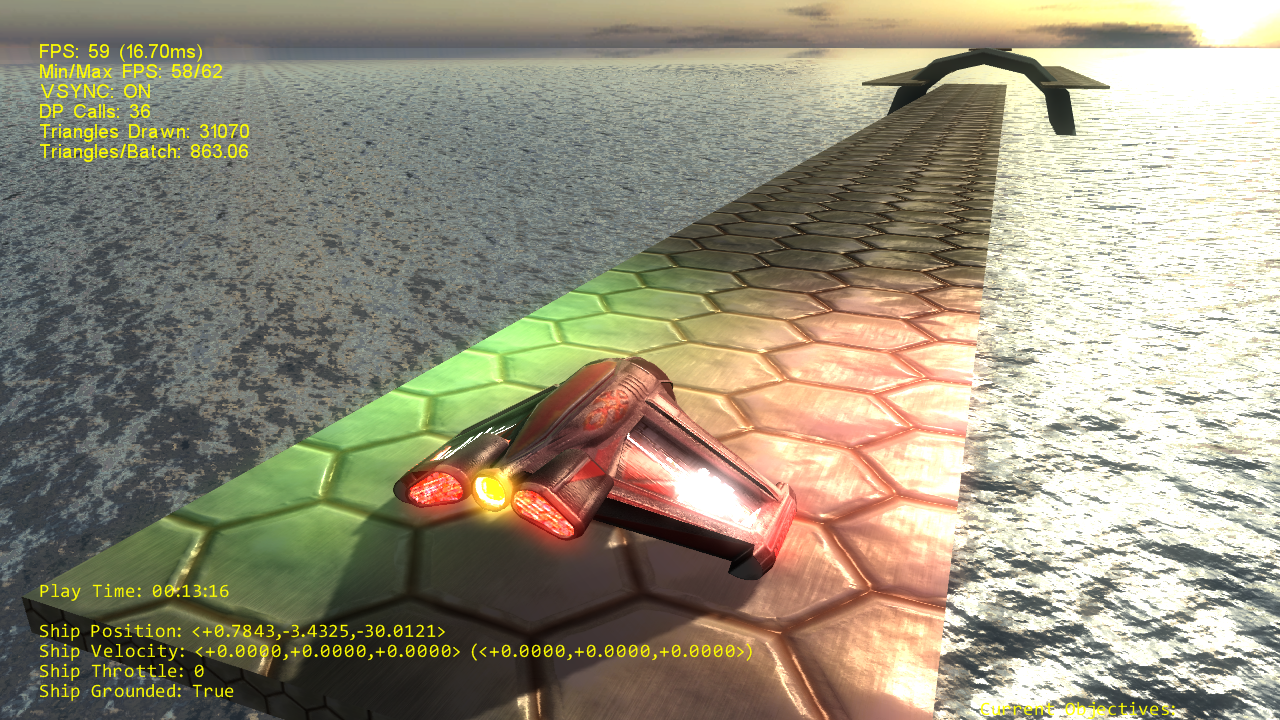

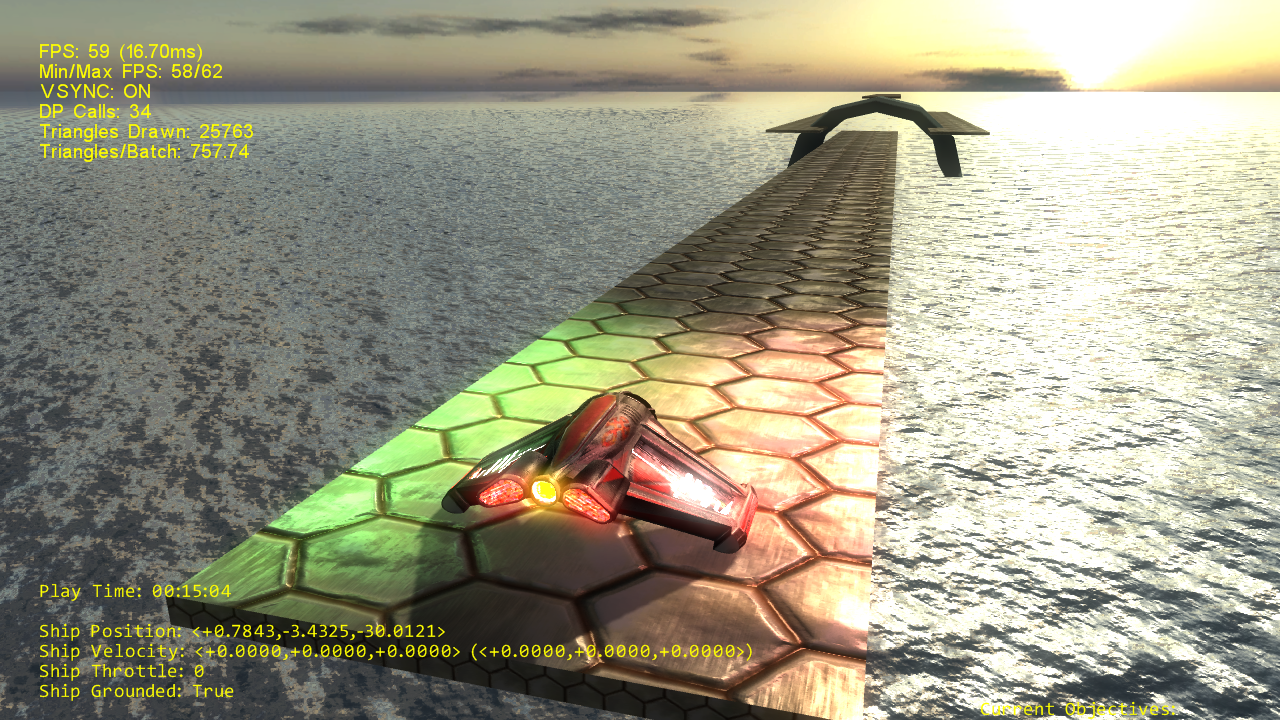

This led to approach #2: in the depth-only pass, render to an RGBA16F surface instead of an R32F surface and write out depth + view-space normals as interpolated from the vertex normals. This worked much better! The only remaining issues (aside from the fact that I just hard-code a diffuse albedo and specular albedo) are that normal maps aren’t used. However, even with those problems the results are still decent, as long as surface colors are primarily determined by your forward rendering pass and the local lights are just “extra”. Here are screenshots of a test scene with forward rendering, and then with the point lights deferred:

The results are clearly not as good as a full forward pass when you have them side-by-side, but I think they’re probably good enough…especially if I only use this technique for lights that are small or far away. The trick is going to be transitioning smoothly from deferred to forward, but that’s certainly doable.
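For reference, the per-pixel work of the deferred point-light pass boils down to something like the sketch below. It’s written in Python rather than shader code, and it’s an illustration rather than my actual implementation: the function names, the `(1 - d/r)^2` falloff and the albedo value of 0.5 are hypothetical, and the specular term is left out to keep it short.

```python
import math

HARD_CODED_DIFFUSE_ALBEDO = 0.5  # assumption: one constant albedo for every surface

def shade_point_light(position, normal, light_pos, light_color, light_radius):
    """Diffuse contribution of one point light at one pixel.
    position and normal are the view-space values read back from the
    depth + normal surface; light_pos is in the same view space."""
    to_light = (light_pos[0] - position[0],
                light_pos[1] - position[1],
                light_pos[2] - position[2])
    dist = math.sqrt(sum(c * c for c in to_light))
    if dist >= light_radius or dist == 0.0:
        return (0.0, 0.0, 0.0)          # outside the light's range
    l = tuple(c / dist for c in to_light)
    # Clamp N.L so surfaces facing away from the light receive nothing.
    n_dot_l = max(0.0, sum(a * b for a, b in zip(normal, l)))
    # Hypothetical smooth falloff that reaches zero at the light radius.
    attenuation = (1.0 - dist / light_radius) ** 2
    scale = HARD_CODED_DIFFUSE_ALBEDO * n_dot_l * attenuation
    return tuple(c * scale for c in light_color)

def additive_blend(forward_color, light_contribution):
    """What additive blending does: the light is summed onto the forward result."""
    return tuple(f + l for f, l in zip(forward_color, light_contribution))
```

A pixel at `(0, 0, 5)` facing the camera, lit by a light at `(0, 0, 3)` with radius 4, picks up a quarter of the light’s intensity scaled by the albedo, while a pixel with the opposite normal picks up nothing. That is about all we asked for: the right color, a brighter area, and no light on surfaces that face away.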

One downside that came with this was that since I was just additively blending in the lights, I couldn’t use my beloved LogLuv encoding for HDR (the encoding is non-linear, so adding two encoded values doesn’t give you the encoded sum). My next-best option on the 360 was to normalize R10G10B10A2 to a range greater than [0,1]. I ended up having to normalize to [0,8] to get the dynamic range I wanted, and unfortunately this can give some visible banding in certain cases. An alternative I’ll have to explore is rendering just the point lights to an R10G10B10A2 buffer, and then sending this to my forward rendering pass to be sampled and added to the result. If I did this I could also use the light prepass approach, and gain back material parameters and proper MSAA for the point lights.
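The banding is easy to see with a little arithmetic. The sketch below models one 10-bit channel as a plain unsigned-normalized value (an assumption about how the buffer is used) with hypothetical helper names. Stretching the same 1024 codes over [0,8] makes each step 8 times larger than it would be over [0,1], and the dark end of the range suffers most.

```python
MAX_CODE = 1023  # largest value a 10-bit channel can hold

def encode_10bit(value, max_range):
    """Quantize a linear HDR value in [0, max_range] to a 10-bit code."""
    clamped = min(max(value, 0.0), max_range)
    return int(clamped / max_range * MAX_CODE + 0.5)  # round to nearest code

def decode_10bit(code, max_range):
    """Expand a 10-bit code back to a linear value in [0, max_range]."""
    return code / MAX_CODE * max_range

def quantization_step(max_range):
    """Smallest difference between two representable values."""
    return max_range / MAX_CODE

# Two dark values that are clearly different in linear space...
dark_a, dark_b = 0.001, 0.003

# ...stay distinct when the 10 bits cover [0,1]...
codes_ldr = (encode_10bit(dark_a, 1.0), encode_10bit(dark_b, 1.0))

# ...but land on the same code when the 10 bits cover [0,8].
codes_hdr = (encode_10bit(dark_a, 8.0), encode_10bit(dark_b, 8.0))
```

Here `codes_ldr` comes out as two different codes while `codes_hdr` is the same code twice, so a smooth gradient between those two values turns into a single flat band.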

Anyway, I’m not saying that what I’m doing is particularly interesting or useful. I’m just trying to demonstrate that there are many possibilities to explore. It’s good to think outside the box every once in a while!