Bokeh II: The Sequel

After I finished the bokeh sample, there were a few remaining issues that I wanted to tackle before I was ready to call it “totally awesome” and move on with my life.

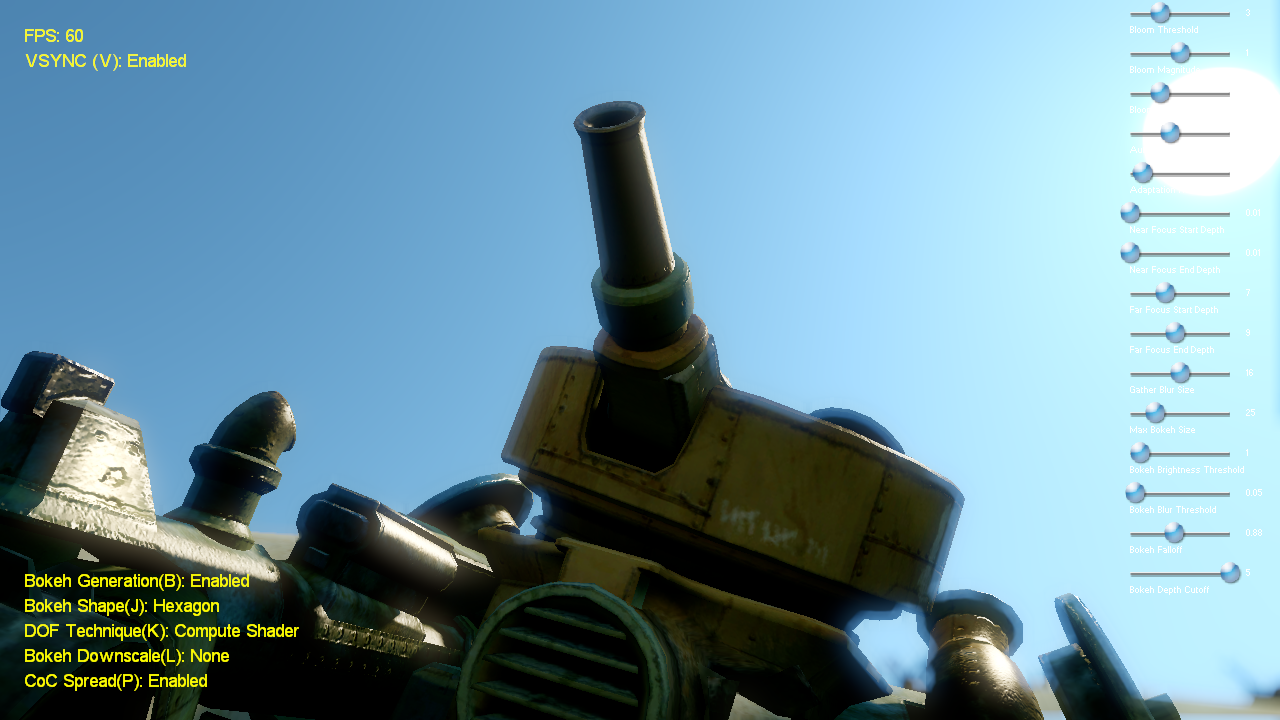

**Good blur** - In the last sample I used either a two-pass blur on a Poisson disc performed at full resolution, or a bilateral Gaussian blur performed at 1/4 resolution (both done in a pixel shader). The former is nice because it gives you a variable filter width per pixel, but you get some ugly noise-like artifacts due to insufficient sampling. Performance can also really take a nose dive with too many samples, especially if your filter radius is very large. Doing two passes helps a lot, but gives you artifacts like the one pictured below:

The traditional “Gaussian blur at 1/4 res” approach sucks even worse. The lower resolution screws up the bilateral filtering, and performance also isn’t so great in a pixel shader due to the large number of texture samples required. But worst of all, it just plain doesn’t look good, because it uses a lerp between blurred and non-blurred pixels to simulate “in-focus” and “out-of-focus”. It ends up looking more like soft focus than like an image that’s gradually coming in or out of focus. On top of that you get aliasing artifacts from working at a lower resolution, which cause shimmering and swimming.

My solution to this problem was to implement a monstrous 21-tap separable bilateral blur in a compute shader. Wide separable blur kernels are a pretty nice fit for compute shaders because you can store the texture samples in shared memory, which allows each thread to take a single texture sample rather than N. Shared memory isn’t that quick, but for larger kernels (10 pixels or so) the savings start to win out over a pixel shader implementation. With such a wide blur kernel, I could perform the blurring at full resolution in order to provide nice sharp edges when the foreground is in focus and the background is out of focus. Here’s a similar image to the one above, this time artifact-free:
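To make the shared-memory argument concrete, here’s a rough CPU simulation in Python of one horizontal pass of a 21-tap box blur, organised the way a compute shader thread group would do it: each group first copies its pixels plus a 10-pixel apron on each side into a cache (standing in for HLSL `groupshared` memory), then every output pixel reads its taps from that cache. The group size of 128, clamp-to-edge addressing, and all function names are assumptions for illustration, not taken from the sample, and the bilateral weighting is left out to keep the focus on the memory access pattern.

```python
# Hypothetical CPU simulation of one horizontal pass of a wide separable blur.
# Assumptions: 128 "threads" per group, 21 taps (radius 10), clamp-to-edge.
GROUP_SIZE = 128
RADIUS = 10
TAPS = 2 * RADIUS + 1


def clamp(v, lo, hi):
    return max(lo, min(v, hi))


def blur_row_naive(row):
    """Pixel-shader style: every output pixel fetches all 21 taps itself."""
    width = len(row)
    out, fetches = [], 0
    for x in range(width):
        total = 0.0
        for k in range(-RADIUS, RADIUS + 1):
            total += row[clamp(x + k, 0, width - 1)]
            fetches += 1
        out.append(total / TAPS)
    return out, fetches


def blur_row_shared(row):
    """Compute-shader style: each group caches its span plus an apron once."""
    width = len(row)
    out = [0.0] * width
    fetches = 0
    for group_start in range(0, width, GROUP_SIZE):
        # Stand-in for groupshared memory: GROUP_SIZE + 2 * RADIUS samples,
        # i.e. roughly one texture fetch per thread.
        cache = []
        for i in range(GROUP_SIZE + 2 * RADIUS):
            cache.append(row[clamp(group_start - RADIUS + i, 0, width - 1)])
            fetches += 1
        # A real shader needs GroupMemoryBarrierWithGroupSync() at this point.
        for t in range(GROUP_SIZE):
            x = group_start + t
            if x >= width:
                break
            # cache[t + j] holds the pixel at x - RADIUS + j.
            out[x] = sum(cache[t:t + TAPS]) / TAPS
    return out, fetches


row = [float(i % 7) for i in range(300)]
shared, shared_fetches = blur_row_shared(row)
naive, naive_fetches = blur_row_naive(row)
print(naive_fetches, shared_fetches)  # 6300 444
```

Both versions produce the same output; only the number of texture reads changes, and the gap widens as the kernel gets wider. In a real shader the apron loads are spread across a few threads and shared-memory reads aren’t free, which is why the win only shows up once the kernel is fairly wide.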

I ended up just using a box filter rather than a Gaussian, which normally gives you ugly box-shaped highlights on the bright spots. Fortunately the bokeh pass does a great job of extracting those points and replacing them with the bokeh shape. To avoid having to lerp between blurred and unblurred versions of the image, I had the filter kernel reject samples that fall outside the CoC size of the pixel being operated on. This effectively gives you a variable-sized blur kernel per pixel, which gives you much nicer transitions for objects moving in or out of focus. It doesn’t look quite as good as the transitions for the disc-based blur, but I think it’s a fair trade-off. The image below shows what the kernel looks like as it transitions from in focus to out of focus:
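Here’s a 1D Python sketch of a CoC-limited box filter like the one described above. It assumes (our assumption, not necessarily how the sample encodes it) that the CoC texture stores a blur radius in pixels, and that a tap is rejected when its distance from the center pixel exceeds that pixel’s own CoC radius, so the 21-tap kernel shrinks to a single tap for in-focus pixels. The function name is hypothetical, and the bilateral depth weighting is omitted; a real implementation would run this once horizontally and once vertically.

```python
MAX_RADIUS = 10  # 21-tap kernel, matching the text


def coc_limited_box_blur(color, coc):
    """One separable pass of a box blur whose width follows each pixel's CoC.

    color: one channel of a row of pixels.
    coc:   per-pixel CoC radius in pixels (hypothetical encoding).
    """
    width = len(color)
    out = []
    for x in range(width):
        radius = min(coc[x], MAX_RADIUS)
        total, weight = 0.0, 0.0
        for k in range(-MAX_RADIUS, MAX_RADIUS + 1):
            if abs(k) > radius:
                continue  # tap lies outside this pixel's CoC: reject it
            sx = max(0, min(x + k, width - 1))
            total += color[sx]
            weight += 1.0
        # The center tap (k == 0) is always accepted, so weight >= 1.
        out.append(total / weight)
    return out


# In-focus left half (CoC 0), fully blurred right half (CoC 10).
color = [0.0] * 20 + [1.0] * 20
coc = [0.0] * 20 + [10.0] * 20
result = coc_limited_box_blur(color, coc)
print(result[19], round(result[20], 3))  # 0.0 0.524
```

The in-focus pixel at index 19 keeps its exact value even though its neighbor is fully blurred, which is what removes the need for a blurred/unblurred lerp.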

**Out-of-focus foreground objects** - As in most DOF implementations, out-of-focus foreground objects were blurred, but still had hard edges. This can look pretty bad, since the object blurring into the background is a key part of simulating the look. A simple way to fix this is to store your CoC size or blurriness factor in a texture, then blur it in screen space. This is essentially the approach Infinity Ward used in their past few Call of Duty games. I went with something similar, except that, as with the DOF blur, I used a compute shader to do a 21-tap separable blur of the CoC texture. To make this look good you have to make sure you only gather samples that come from a lower depth (closer to the camera) and that have a larger CoC than the pixel being operated on by that thread. Here’s an out-of-focus foreground object with and without the CoC spreading:

CoC Blur Off:

CoC Blur On:
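A 1D Python sketch of the CoC spreading pass follows. It applies the two rules from the text: a neighbor only contributes if it is closer to the camera (lower depth) and has a larger CoC than the center pixel. How rejected taps are handled is our own assumption: they contribute the center pixel’s own CoC, so the result is a 21-tap box average that can only grow the CoC and falls off with distance from the foreground edge. The names and the depth/CoC encoding are hypothetical.

```python
RADIUS = 10  # 21-tap kernel, as in the text
TAPS = 2 * RADIUS + 1


def spread_coc(coc, depth):
    """One separable pass that bleeds foreground CoC onto background pixels."""
    width = len(coc)
    out = []
    for x in range(width):
        total = 0.0
        for k in range(-RADIUS, RADIUS + 1):
            sx = max(0, min(x + k, width - 1))
            if depth[sx] < depth[x] and coc[sx] > coc[x]:
                total += coc[sx]  # closer, blurrier neighbor spreads onto us
            else:
                total += coc[x]   # rejected tap: fall back to our own CoC
        out.append(total / TAPS)
    return out


# Blurry foreground object (depth 1, CoC 10) in front of a sharp background.
depth = [1.0] * 20 + [5.0] * 20
coc = [10.0] * 20 + [0.0] * 20
spread = spread_coc(coc, depth)
print([round(v, 2) for v in spread[18:32:2]])
# [10.0, 4.76, 3.81, 2.86, 1.9, 0.95, 0.0]
```

The foreground keeps its CoC, while the background just past the edge picks up a CoC that ramps down over the kernel radius, which lets the foreground object’s silhouette soften into the background.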

**No depth occlusion for bokeh sprites** - I didn’t do any sort of occlusion testing for bokeh sprites in the sample, and waved it off by saying it would be simple to use the depth-stencil buffer and enable depth testing. While that’s true, it turns out that doing so doesn’t really give you great results. The problem is that if a bokeh sprite is covering an area that’s totally out of focus, you don’t want those out-of-focus pixels occluding the bokeh sprites. Otherwise the bokeh just won’t blend in at all with the pixels that are blurred by the DOF blur pass. So instead I implemented my own depth occlusion function that attenuates based on depth, but removes the attenuation if the pixel is out of focus. This let me keep the same overall look, while removing cases where I was getting “halos” due to the bokeh sprites rendering on top of in-focus objects. And since I was doing it in the shader anyway, I threw in a “soft” occlusion function rather than a binary comparison.

Without occlusion:

With occlusion:
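One way to write an occlusion function like this is sketched below in Python. The specifics are assumptions rather than the sample’s actual code: the soft depth test is a linear ramp of hypothetical width `softness` (in depth units) centered where the sprite and scene depths are equal, and the scene pixel’s CoC is divided by a hypothetical `focus_threshold` to get a 0-1 blurriness that fades the occlusion out.

```python
def clamp01(v):
    return max(0.0, min(v, 1.0))


def bokeh_visibility(sprite_depth, scene_depth, scene_coc,
                     softness=0.5, focus_threshold=1.0):
    """Multiplier in [0, 1] applied to a bokeh sprite fragment.

    Lower depth = closer to the camera.
    """
    # Soft depth test: 1 when the scene is well behind the sprite, 0 when it
    # is well in front, with a linear ramp of width `softness` in between.
    depth_visibility = clamp01((scene_depth - sprite_depth) / softness + 0.5)
    # 0 for a sharp scene pixel, 1 once its CoC reaches focus_threshold.
    blurriness = clamp01(scene_coc / focus_threshold)
    # Out-of-focus scene pixels are not allowed to occlude the sprite:
    # lerp from the depth-test result towards fully visible.
    return depth_visibility + (1.0 - depth_visibility) * blurriness


print(bokeh_visibility(10.0, 5.0, 0.0))  # sharp object in front: 0.0
print(bokeh_visibility(10.0, 5.0, 2.0))  # blurry object in front: 1.0
print(bokeh_visibility(5.0, 10.0, 0.0))  # scene behind the sprite: 1.0
```

Using a ramp instead of a hard comparison avoids a visible cut line where a sprite intersects nearby geometry.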

Code and binaries are available here: https://github.com/TheRealMJP/DX11Samples/releases/tag/v1.4

4/22/2011 - Fixed a texture sampling bug on Nvidia hardware

Comments:

#### Michael -

Hi, just downloaded the update and it’s working fine now with both nVidia and ATI. Just a quick question: how hard would it be to incorporate your motion blur sample with this? I think that would make it a complete DX11-based postfx framework!

#### [MJP](http://mynameismjp.wordpress.com/ "mpettineo@gmail.com") -

@Michael, thanks for trying it out, and for saying it looks nice! Fortunately I now have a GTX 470 at work, so I can debug Nvidia issues. It turns out that the blockiness was happening because I was sampling the textures at integer locations rather than at texel centers (integer + 0.5), and I guess on Nvidia sampling off-center like that can cause issues. @Maciej Wow, that’s pretty bad! It runs at around 160fps on my 6950, with the bulk of the frame time actually spent rendering the scene + shadow maps.
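To illustrate the half-texel issue, here’s a small Python sketch assuming Direct3D 10/11 conventions, where texel i covers the normalized range [i/W, (i+1)/W] and its center sits at (i + 0.5)/W. With bilinear filtering the hardware works in texel space at u*W - 0.5, so a coordinate of exactly i/W lands on a boundary and blends texels i-1 and i equally; with point filtering it sits right on a rounding edge, where tiny precision differences can pick either texel. The helper names are hypothetical.

```python
import math


def texel_center_uv(i, width):
    """Normalized coordinate of the center of texel i (D3D10+ convention)."""
    return (i + 0.5) / width


def bilinear_taps(u, width):
    """Texels and weights a 1D bilinear fetch at u would blend."""
    p = u * width - 0.5  # position in texel space
    left = math.floor(p)
    frac = p - left
    return (left, 1.0 - frac), (left + 1, frac)


WIDTH = 16
print(bilinear_taps(3 / WIDTH, WIDTH))                  # ((2, 0.5), (3, 0.5))
print(bilinear_taps(texel_center_uv(3, WIDTH), WIDTH))  # ((3, 1.0), (4, 0.0))
```

Sampling at the texel center gets exactly one texel regardless of filter mode, which is why the + 0.5 offset makes the result consistent across GPUs.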

#### [Maciej]( "msawitus@gmail.com") -

Very nice article & demo! I only wish performance was a bit better (13 fps on ATI 5650).

#### [LieblingsAlgorithmen – Links | Echtzeitgrafiker Magdeburg](http://echtzeitgrafiker.yojimbo.de/?p=47 "") -

[…] Bokeh II – Fake Bokeh – (mit source) […]

#### [Louis Castricato]( "ljcrobotic@yahoo.com") -

Can someone convert the sample code into SlimDX? I don’t know anything (yet) about C++, and I wouldn’t wanna miss out on an amazing sample like this!

#### [MJP](http://mynameismjp.wordpress.com/ "mpettineo@gmail.com") -

Hey Michael, The link works for me, but Skydrive is terrible so I’m not surprised it doesn’t work. I uploaded the .zip to a new Codeplex project I made, so give that link a try.

#### [Michael]( "jayben71@gmail.com") -

You are the man! Awesome update, can’t wait to see this; however, the download link seems broken…

#### [Michael]( "jayben71@gmail.com") -

Hi MJP, yep, Codeplex is fine, I managed to download it. I’m running an Nvidia GTX 480 and an ATI 6870 on Windows 7. It looks great with ATI, not so much with Nvidia. On Nvidia it looks blocky, with major edge aliasing, as if the final render/color buffer were set at a very low res (1/4?) when Computeshader is enabled; the other variants seem fine. Great improvement overall in quality. Would it be possible to add an option to change the size of the taps when using the Computeshader blur?

#### [Draft on depth of field resources | Light is beautiful](http://lousodrome.net/blog/light/2012/01/17/draft-on-depth-of-field-resources/ "") -

[…] I forgot to mention a second article of Matt Pettineo, where he suggests a combination of techniques to achieve a better result. An example of actual bokeh in a photo of […]

#### [OpenGL Insights « The Danger Zone](http://mynameismjp.wordpress.com/2012/08/05/opengl-insights/ "") -

[…] time ago Charles de Rousiers adapted my Bokeh Depth of Field sample to OpenGL, and we contributed it as a chapter to the recently-released OpenGL Insights. Bokeh is […]